Artificial Intelligence (AI) is rapidly transforming many sectors, from autonomous vehicles and financial services to healthcare and beyond. Yet as AI applications become more widespread and complex, new vulnerabilities emerge. Increasingly, the AI systems themselves are becoming prime targets for cybercriminals.

Small Changes, Big Impact

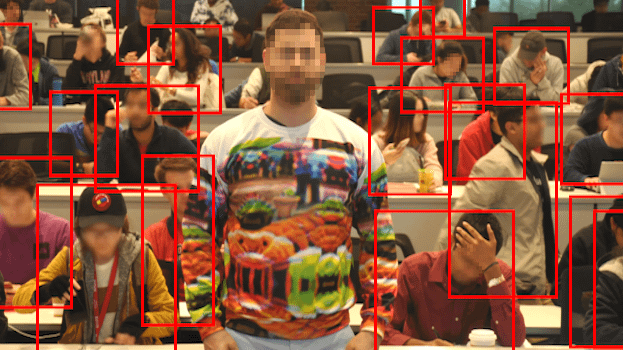

Adversarial attacks subtly alter the input data fed to an AI system in ways that are virtually imperceptible to humans but cause dramatic misclassifications or wrong decisions. Attackers exploit the fact that a model's output can be highly sensitive to small, carefully chosen changes in its input, which makes the system behave unpredictably or even dangerously.
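To make "small changes, big impact" concrete, here is a minimal sketch of one well-known attack, the Fast Gradient Sign Method (FGSM), against a toy logistic-regression classifier. The article does not prescribe a specific attack; FGSM, the model, its weights, and the budget `eps=0.02` are all hypothetical choices for illustration. The key idea is that nudging every input feature by a tiny amount in the direction that increases the model's loss can add up to a large change in the output when there are many features.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def sign(v: float) -> int:
    # -1, 0 or +1 depending on the sign of v
    return (v > 0) - (v < 0)

def predict_proba(w, b, x):
    """Probability of class 1 under a (hypothetical) logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method against a logistic-regression model.

    For binary cross-entropy, the gradient of the loss with respect to the
    input is (p - y) * w, so each feature is moved by eps in the direction
    that increases the loss, then clipped back to the valid range [0, 1].
    """
    p = predict_proba(w, b, x)
    return [min(1.0, max(0.0, xi + eps * sign((p - y) * wi)))
            for wi, xi in zip(w, x)]

# Toy model with 1,000 input features (think: pixel intensities in [0, 1]).
d = 1000
w = [0.05 if i % 2 == 0 else -0.05 for i in range(d)]
b = -4.5
x = [0.6 if i % 2 == 0 else 0.4 for i in range(d)]
y = 1  # true label

x_adv = fgsm(w, b, x, y, eps=0.02)
print("original predicted as class 1:", predict_proba(w, b, x) >= 0.5)
print("adversarial predicted as class 1:", predict_proba(w, b, x_adv) >= 0.5)
print("largest per-feature change:", max(abs(a - o) for a, o in zip(x_adv, x)))
```

No single feature moves by more than 0.02, yet because all 1,000 weights have magnitude 0.05, the changes accumulate to a shift of 0.02 × 50 = 1.0 in the model's logit (from about +0.5 to about -0.5), which is enough to flip the prediction. Real image classifiers are far more complex, but the same accumulation effect over many pixels is what the stop-sign and facial-recognition scenarios below rely on.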

Consider an autonomous vehicle: a few small, seemingly insignificant stickers or markings on a traffic sign can cause the car's AI-based image recognition system to misread a "STOP" sign as a speed-limit sign, potentially resulting in a serious accident. Similar techniques could target facial recognition systems, where minor pixel-level adjustments could make the AI misidentify a person or fail to recognize them entirely.

In 2025, such attacks pose serious risks across industries. Medical AI systems, for example, might misdiagnose diseases if attackers subtly alter medical imaging data, with potentially severe consequences for patients. Similarly, financial algorithms could be tricked into making poor investment decisions through adversarial manipulation of market data.

To counteract these threats, companies must actively build resilience into their AI models. A key defensive technique is adversarial training: the model is deliberately trained on manipulated examples, typically generated against the current version of the model during training, so that it learns to give the correct answer even when its inputs have been subtly perturbed. By simulating potential attacks during training, models become more resistant and reliable in real-world scenarios.
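The training loop behind adversarial training can be sketched in a few lines. This is a minimal, hypothetical illustration, not a production recipe: it assumes a logistic-regression model, uses FGSM as the simulated attack, mixes clean and adversarial examples 50/50 in every update, and trains on a tiny made-up 2-D dataset. The hyperparameters (`eps`, `lr`, `epochs`) are arbitrary choices for the example.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def sign(v: float) -> int:
    return (v > 0) - (v < 0)

def logit(w, b, x):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def fgsm(w, b, x, y, eps):
    """Move each feature by eps in the direction that increases the loss."""
    p = sigmoid(logit(w, b, x))
    return [xi + eps * sign((p - y) * wi) for wi, xi in zip(w, x)]

def adversarial_train(data, eps=0.1, lr=0.5, epochs=200):
    """Full-batch gradient descent on clean + FGSM examples (50/50 mix)."""
    dim = len(data[0][0])
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        # Craft adversarial copies against the *current* model, then train on both.
        batch = data + [(fgsm(w, b, x, y, eps), y) for x, y in data]
        gw, gb = [0.0] * dim, 0.0
        for x, y in batch:
            err = sigmoid(logit(w, b, x)) - y  # d(cross-entropy)/d(logit)
            for i in range(dim):
                gw[i] += err * x[i]
            gb += err
        n = len(batch)
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b

def accuracy(w, b, data, eps=0.0):
    """Accuracy on clean inputs (eps=0) or under an FGSM attack of size eps."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += int((sigmoid(logit(w, b, x_eval)) >= 0.5) == bool(y))
    return correct / len(data)

# Hypothetical toy dataset: class 1 in the positive quadrant, class 0 mirrored.
grid = [0.2, 0.3, 0.4]
data = ([([a, c], 1) for a in grid for c in grid]
        + [([-a, -c], 0) for a in grid for c in grid])

w, b = adversarial_train(data, eps=0.1)
print("clean accuracy:", accuracy(w, b, data))
print("accuracy under FGSM (eps=0.1):", accuracy(w, b, data, eps=0.1))
```

The important design choice is that the adversarial examples are regenerated from the current weights at every step, so the model is always trained against attacks on its present weaknesses rather than a fixed set of manipulated inputs. This toy dataset is deliberately easy; in practice, adversarial training is applied to deep networks, often with stronger multi-step attacks such as projected gradient descent (PGD), and usually costs some accuracy on clean data.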

Strategic Implications for 2025

- Increased Regulatory Requirements: Regulatory authorities are likely to establish guidelines and standards for "secure AI," making compliance mandatory in critical applications.
- Enhanced AI Governance: Companies will need to introduce strict AI governance frameworks, including security audits and rigorous testing against adversarial scenarios, to ensure their AI systems are robust and reliable.
- Security Awareness & Education: Businesses must raise awareness of AI-specific risks among employees and decision-makers, emphasizing vigilance and skepticism when AI-driven decisions appear inconsistent or suspicious.

Stay ahead of the wave!
